We Built an Easier Way to Connect MCP Servers to OpenAI's Agent Platform

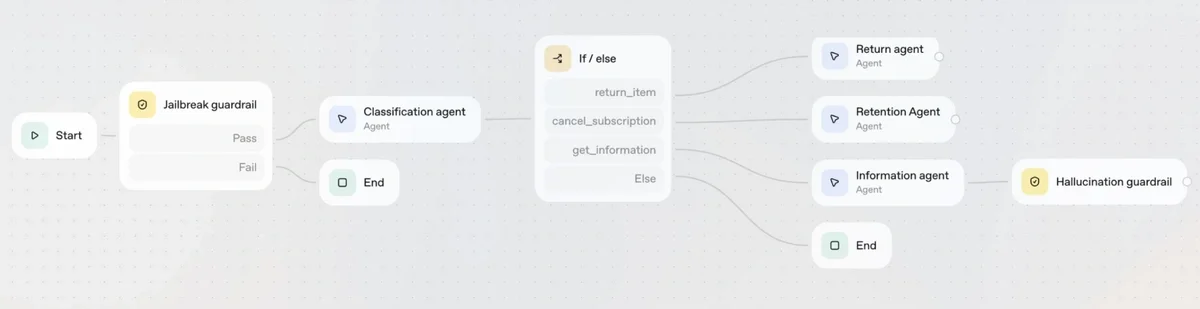

This week, OpenAI introduced its new agent-building platform, featuring AgentKit and Agent Builder. Agent Builder provides a canvas, similar to Zapier and n8n, where users can draw control flows and drag-and-drop components to build automated workflows.

Agent Builder makes it relatively simple to create an agent from OpenAI’s components, which saves developer time. You can focus on the workflow logic and rely on the built-in guardrails and evals to test robustness and keep the agent's behavior consistent.

Whether you prefer to draw your workflow or write code to automate it, OpenAI’s tools make MCP servers easy to integrate.

In this post, I show you how to use MCPTotal (mcptotal.io) to further simplify the integration of MCP servers, while safely isolating your tools and gaining the advantage of security logs and audits.

Creating and running MCP servers is that simple

You need to run MCP servers to connect them to the workflow.

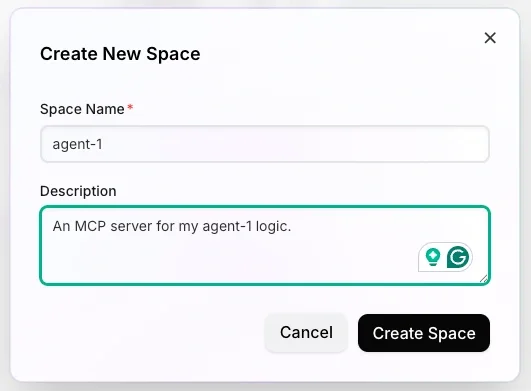

With MCPTotal, you sign up for free and can then create a space on the Spaces page to run the MCP servers for your agent.

A space is an aggregation of MCP tools, running as an MCP server endpoint in a cloud-hosted, isolated container. You add the MCP servers for your agent workflow to the space. Then connect it to any MCP-enabled AI client using the space’s endpoint URL.

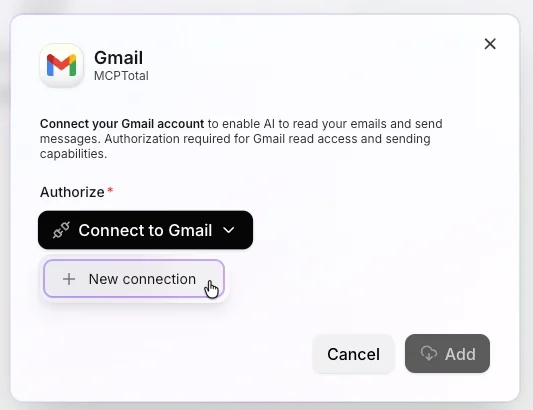

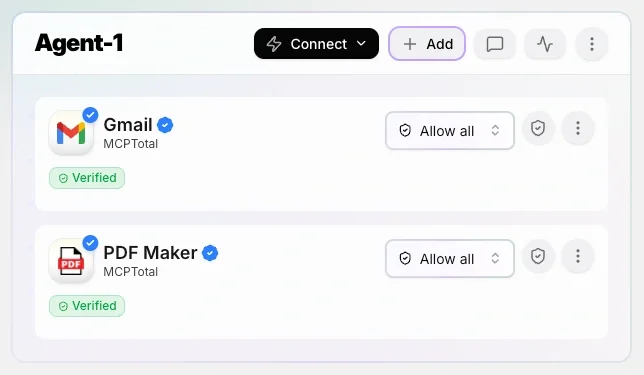

To see how this works, add the Gmail MCP server and authorize it to access your inbox, so your agent can read and write emails.

Add PDF Maker too, so your agent can create PDFs. This server understands Markdown.

The space is now ready to use.

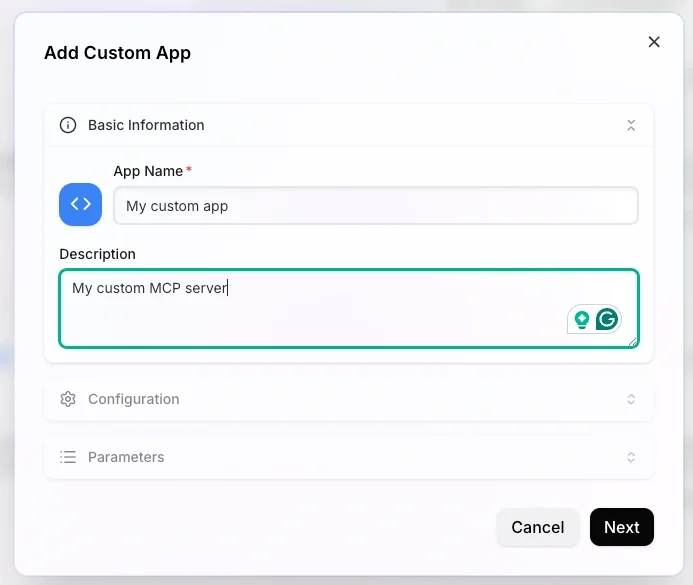

In addition to the MCPTotal catalog of MCP servers, you can run any MCP server by adding a custom Python or Node package (uvx/npx) or a Docker image, using the Add Custom App card in the Catalog page.

Connecting the MCP server

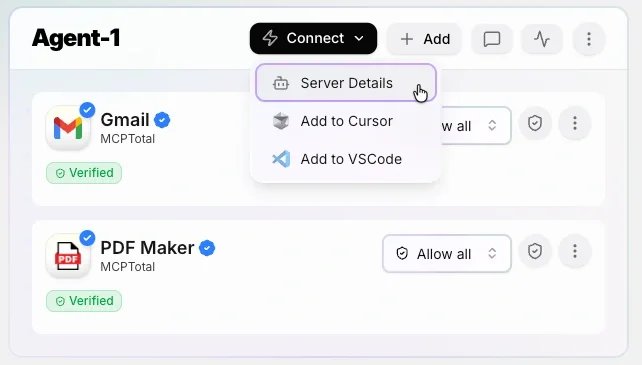

On the MCPTotal Spaces page, click Connect to get the space’s MCP server endpoint URL. This URL gives your AI agent access to all the tools in the space (Gmail and PDF Maker).

OpenAI’s SDKs don’t support OAuth for MCP directly. Some of them accept a bearer token in an Authorization header, and others don’t.

Fortunately, MCPTotal supports several protocols and authentication methods for connecting to a space’s MCP server. While OpenAI’s documentation uses SSE, our tests show that “Streamable HTTP” also works reasonably well.
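
To make the two authentication styles concrete, here is a minimal sketch that produces the URL and headers for each one. It assumes the secret is passed either as a `key` query parameter (the name is taken from the example URL used in this post) or as a standard `Authorization: Bearer` header. The function name `space_connection` and the example host are hypothetical.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def space_connection(base_url: str, secret: str, style: str) -> tuple[str, dict[str, str]]:
    """Return (url, headers) for one of two authentication styles.

    style="query"  -> the secret travels in the URL as ?key=...
    style="header" -> the secret travels in an Authorization: Bearer header
    """
    if style == "header":
        return base_url, {"Authorization": f"Bearer {secret}"}
    if style == "query":
        parts = urlsplit(base_url)
        # Keep any query parameters already present and append the key.
        query = parse_qsl(parts.query) + [("key", secret)]
        return urlunsplit(parts._replace(query=urlencode(query))), {}
    raise ValueError(f"unknown auth style: {style!r}")


if __name__ == "__main__":
    # Hypothetical endpoint and secret, for illustration only.
    print(space_connection("https://mcp.example.test/mcp-abc/mcp", "s3cret", "query"))
    print(space_connection("https://mcp.example.test/mcp-abc/mcp", "s3cret", "header"))
```

The header style keeps the secret out of the URL, so it is less likely to end up in logs or browser history. Prefer it wherever the client supports custom headers.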

Using the OpenAI Client Responses API with MCP

If you’re using OpenAI’s SDK, you can extend it to use MCP servers by passing a 'tools' list that contains an MCP entry. Here's a Python snippet that does that:

```python
from openai import OpenAI

# The client reads OPENAI_API_KEY from the environment.
client = OpenAI()

# The synchronous client takes keyword arguments and is not awaited.
resp = client.responses.create(
    model="gpt-5",
    tools=[
        {
            "type": "mcp",
            "server_label": "agent-1-mcp",
            "server_description": "An MCP server with both Gmail and PDF Maker ready.",
            # The space's endpoint URL; the secret is the "key" query parameter.
            "server_url": "https://mcp.mcptotal.io/mcp-zhjaz2lop3fk5htdcf7k/mcp?key=jxlJOXdyPDvXf8ByODb8",
            "require_approval": "never",
        },
    ],
    input="Create a pdf saying 'hello world' and send it to johndoe@gmail.com, figure out subject and other details on your own please.",
)

print(resp.output_text)
```

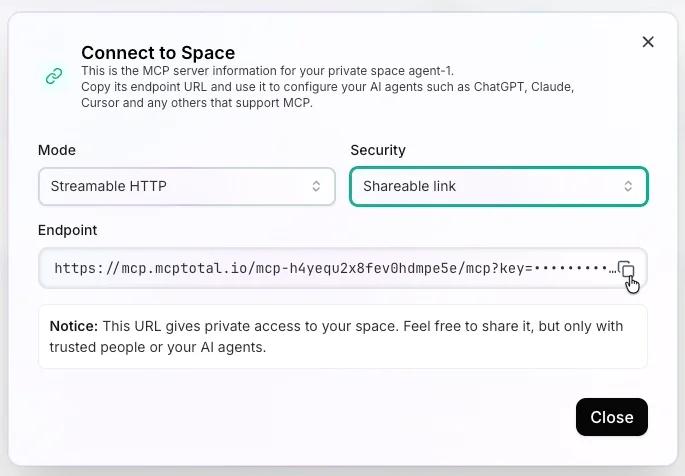

Notice that the MCP server is specified as a full URL with no separate authentication. As far as I can tell, the OpenAI SDK doesn't support authentication here (I even peeked at the code; let me know if I missed something). With MCPTotal, the URL itself carries the secret as a query parameter.

To use this code, add your server address to "server_url".
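
Because the URL carries the secret, it is worth treating the whole URL as a credential. The sketch below reads it from an environment variable instead of hard-coding it, and masks the key before anything is logged. The variable name `MCPTOTAL_SPACE_URL` and both helper names are hypothetical; the tool fields mirror the snippet in this post.

```python
import os
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def redact_url(url: str) -> str:
    """Mask the value of the `key` query parameter so the URL is safe to log."""
    parts = urlsplit(url)
    masked = [(k, "***" if k == "key" else v) for k, v in parse_qsl(parts.query)]
    # safe="*" keeps the mask readable instead of percent-encoding it.
    return urlunsplit(parts._replace(query=urlencode(masked, safe="*")))


def mcp_tool_from_env(var_name: str = "MCPTOTAL_SPACE_URL") -> dict:
    """Build the MCP tool entry from an environment variable (hypothetical name)."""
    url = os.environ.get(var_name)
    if not url:
        raise RuntimeError(f"Set {var_name} to your space's endpoint URL")
    return {
        "type": "mcp",
        "server_label": "agent-1-mcp",
        "server_url": url,
        "require_approval": "never",
    }


if __name__ == "__main__":
    tool = mcp_tool_from_env()
    print("Using MCP server:", redact_url(tool["server_url"]))
```

The returned dictionary can be passed as the single element of the 'tools' list.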

Note that setting “require_approval” to “never” lets your AI agent call the MCP server's tools without prompting for approval.
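
If skipping approval for every tool is more than you want, a narrower policy is possible. The sketch below assumes, based on my reading of OpenAI's documentation, that "require_approval" also accepts "always" or an object that names the tools allowed to run without approval. The helper name and the tool names are hypothetical; use the names your space actually exposes.

```python
def build_mcp_tool(server_url, server_label, auto_approved_tools=None):
    """Build an MCP tool entry with an explicit approval policy.

    auto_approved_tools=None -> every tool call needs approval ("always").
    auto_approved_tools=[..] -> only the listed tools skip approval.
    """
    tool = {
        "type": "mcp",
        "server_label": server_label,
        "server_url": server_url,
    }
    if auto_approved_tools is None:
        tool["require_approval"] = "always"
    else:
        # Assumed shape: {"never": {"tool_names": [...]}}
        tool["require_approval"] = {"never": {"tool_names": list(auto_approved_tools)}}
    return tool


if __name__ == "__main__":
    # Hypothetical tool name: let PDF creation run freely, gate everything else.
    print(build_mcp_tool("https://mcp.example.test/mcp-abc/mcp", "agent-1-mcp", ["create_pdf"]))
```

With a policy other than “never”, your code has to handle the approval requests that come back in the response before the tool call proceeds.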

AgentKit Integration for MCP

So, how do you set up the new AgentKit? You can get into all the details in OpenAI’s openai-agents-python example, but for this post, I'll keep things simple.

This code shows a complete example of how to use the Pythonic Agent class integrated with your MCP server space. This time, you can safely use the Streamable HTTP protocol and an HTTP bearer token, passed in the "headers" entry, so the connection is more secure.

```python
import asyncio

from agents import Agent, Runner, gen_trace_id, trace
from agents.mcp import MCPServer, MCPServerStreamableHttp
from agents.model_settings import ModelSettings


async def run(mcp_server: MCPServer):
    # The agent can call any tool exposed by the space.
    agent = Agent(
        name="Assistant",
        instructions="Use the tools to answer the questions.",
        mcp_servers=[mcp_server],
        model_settings=ModelSettings(tool_choice="required"),
    )

    message = "send an email to nir.haas@piiano.com saying hello from Dabah and agentkit"
    print(f"Running: {message}")
    result = await Runner.run(starting_agent=agent, input=message)
    print(result.final_output)


async def main():
    async with MCPServerStreamableHttp(
        name="Streamable HTTP Python Server",
        # params must be a plain dict; the secret goes in the Authorization header.
        params={
            "url": "https://mcp.mcptotal.io/mcp-a0keutiwgnb32kjgykwx/mcp",
            "headers": {"Authorization": "Bearer n2Y0khGnWmaorFbLwopO"},
        },
    ) as server:
        trace_id = gen_trace_id()
        with trace(workflow_name="Streamable HTTP Example", trace_id=trace_id):
            print(f"View trace: https://platform.openai.com/traces/trace?trace_id={trace_id}\n")
            await run(server)


asyncio.run(main())
```

A few notes on using MCP with OpenAI

- The GPT model may ask questions to complete a request. To minimize or avoid this, give the agent a detailed prompt. Err on the side of over-specifying.

- While chatgpt.com supports MCP servers that work with files, the SDKs (both the Responses API and AgentKit) don't work well with MCP servers that use files as resources.

- Don't forget to set your API key environment variable. For example, in a terminal: `export OPENAI_API_KEY="<key here>"`

- OpenAI's documentation warns that connecting to a malicious server can cause harm, as it may try to steal data or trick the agent into performing unintended actions. Make sure you only connect to trusted servers. We have a feature to block prompt injections coming soon.

- Some SDKs support authentication, and others don't. Use the HTTP header when possible.
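
To illustrate the first note, here is one way to over-specify a request so the model has nothing left to ask. It is only a sketch: the helper `build_email_prompt` and its wording are hypothetical, and the right level of detail depends on the tools in your space.

```python
def build_email_prompt(recipient, subject, body, pdf_text=None):
    """Spell out every detail of an email task as a numbered list of steps."""
    steps = []
    if pdf_text is not None:
        steps.append(f"Create a PDF whose only content is the text: {pdf_text!r}.")
        steps.append("Attach that PDF to the email.")
    steps.append(f"Send one email to {recipient}.")
    steps.append(f"Use exactly this subject: {subject!r}.")
    steps.append(f"Use exactly this body: {body!r}.")
    steps.append("Do not ask follow-up questions; if something is missing, stop and report it.")
    return "\n".join(f"{i}. {step}" for i, step in enumerate(steps, 1))


if __name__ == "__main__":
    print(build_email_prompt("johndoe@gmail.com", "Hello", "Hi there", pdf_text="hello world"))
```

The resulting string can be used as the "input" of a Responses API call or as the message passed to the agent runner.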

Agent Builder Integration for MCP

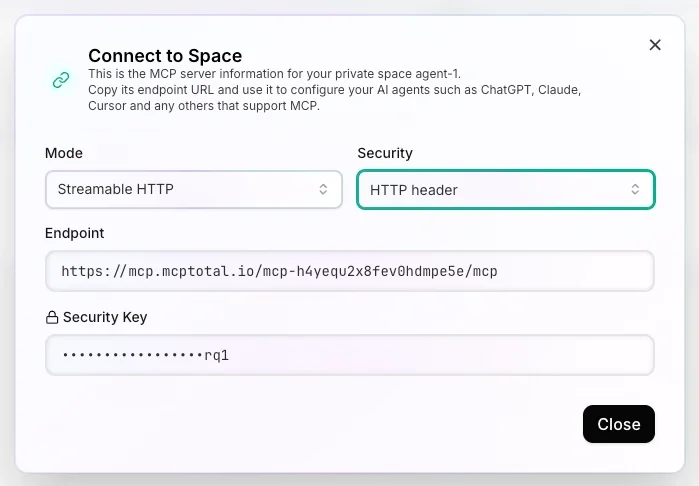

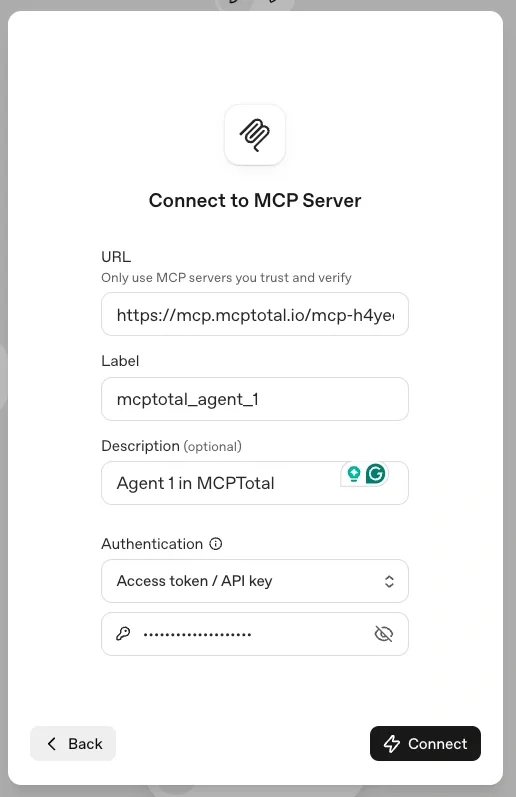

When using the builder dashboard with an MCP component, you must use an access token for authentication.

To connect it, select Streamable HTTP in the space's Connect to dialog. Then, under Security, choose HTTP header. A key is displayed; this key is sent in the HTTP header to authorize access to your server.

You now have a matching pair of URL and secret. Paste these into the Agent Builder MCP configuration dialog. Using this approach is more secure, as it uses an HTTP header to provide the access token to your MCP server in MCPTotal.
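
Before pasting the pair into Agent Builder, you may want to confirm that it works. The sketch below builds an MCP "initialize" request over Streamable HTTP with the key sent as a bearer token, using only the Python standard library. The helper names, the client name, and the protocol version string are assumptions; check them against the MCP specification and your space's settings.

```python
import json
import urllib.request


def build_initialize_request(url: str, token: str) -> urllib.request.Request:
    """Build (but do not send) a JSON-RPC initialize request for an MCP endpoint."""
    payload = {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "initialize",
        "params": {
            "protocolVersion": "2025-03-26",  # assumed; use the version your server supports
            "capabilities": {},
            "clientInfo": {"name": "connection-check", "version": "0.1.0"},
        },
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
            # Streamable HTTP servers may answer with JSON or an event stream.
            "Accept": "application/json, text/event-stream",
        },
    )


def check_connection(url: str, token: str, timeout: float = 10.0) -> int:
    """Send the request and return the HTTP status; 4xx/5xx raise urllib.error.HTTPError."""
    with urllib.request.urlopen(build_initialize_request(url, token), timeout=timeout) as resp:
        return resp.status
```

A rejected key typically surfaces as an HTTP error raised by `check_connection`, which is easier to diagnose here than inside the Agent Builder dialog.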

Summary

MCPTotal enables you to host various tools (MCP servers) and securely expose them to OpenAI’s agent platform with appropriate URLs and credentials. MCPTotal lets you run custom MCPs, so you avoid the hassle of deploying and managing them yourself. The MCPTotal architecture provides isolated (single-tenant) and sandboxed server operations, along with auditing and logging for security and diagnostics.

For further details on the security architecture, check out MCPTotal's Security Overview blog post.