MCPTotal's Security Overview

This blog post details the robust, secure infrastructure we built to host managed MCP servers for our customers via mcptotal.io. Our primary objective was to provide not only managed MCP services but also the highest possible level of security.

We prioritize security because integrating AI applications with third-party applications such as Gmail, Slack, and GitHub is inherently sensitive and has serious implications. Connecting everything to AI clients can be daunting, and our aim is to make it safe and trustworthy. Security is also where our passion lies: we've been doing it for over 20 years.

Today, there are plenty of open-source MCP servers out there, but many of them feel like marketing noise. Even some of Anthropic's own servers are just demos: they were released to promote MCP and then abandoned, even as the protocol gained massive traction.

Case in point: when we tried to connect our WhatsApp account using MCP, we found several projects that claimed to provide this functionality. In practice, each of them was either non-functional, limited in features, or extremely slow to load, sometimes taking up to 10 minutes.

That’s when we realized:

If people are going to rely on MCP servers to get things done with MCP, then naturally those servers need to be both accessible to everyone and secure enough that we (and our customers) can actually trust them. Securing MCP is not enough if running and using it is difficult. This is especially true for non-developers, and even for developers it can be tedious.

The threats of running untrusted MCP servers and plugging in your third-party applications

So you download this shiny new MCP server you spotted in a newsletter, and you're hyped to run it, ready to hook it up to your favorite AI chat app, Cursor, or a similar application.

And then you ask yourself:

- What are they actually doing with my access token?
- Could my data be leaking somewhere without me knowing?
- Is this server vulnerable to some dumb, basic attack that would get it compromised?
- Are its dependencies even maintained, or are we just trusting some abandoned package from 2018?
- Is it secretly phoning home or logging things I never agreed to?
- Now that it has full access, is it touching parts of my account I didn't intend?
- And last but not least, if I run it locally… is it malicious? Is it sniffing for credentials, wallets, API keys, basically anything it can grab on my computer? Yikes.

Thinking about all the risks that come with using AI to boost productivity, we realized there had to be a better way. So we started designing a secure model for running MCP servers, one that is fully isolated and safe.

MCP server deployment model

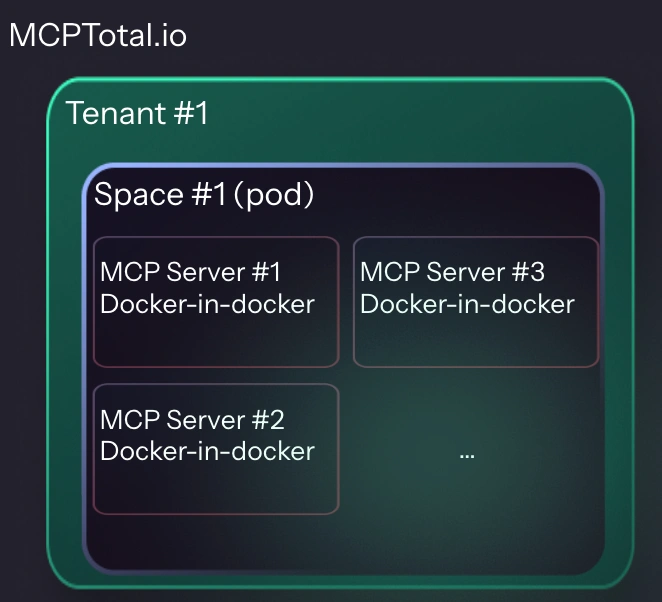

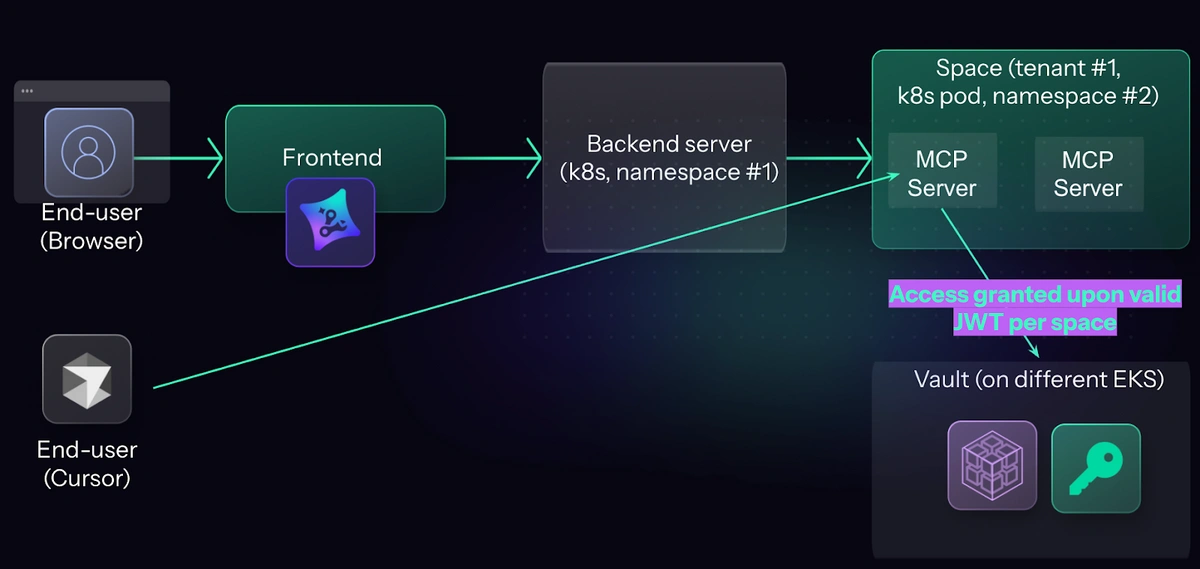

Our product introduces Spaces. Each space serves as an MCP server endpoint with a unique URL, accessible to any client.

In other words, we host MCP servers for our users so they don't have to deal with them; Spaces are designed to simplify MCP server setup. Instead of managing numerous URLs in the client (one per server), users can use a single URL that aggregates several MCP servers underneath. Because the endpoint address is static, users can add or remove servers within a space without changing client connections or configuration. For example, rather than editing Cursor's JSON configuration file whenever a server changes, they add the space's endpoint URL once, and from there everything happens in the cloud.
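
To make the single-endpoint idea concrete, here is a minimal sketch of how a space-level aggregator might merge the tool lists of several servers and route each call back to its owner. It assumes a hypothetical `server__tool` naming scheme and in-process callables standing in for real MCP servers; the actual routing server works over the network and its naming scheme isn't described in this post.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict

# Hypothetical separator used to namespace tools by server name.
SEP = "__"


@dataclass
class Space:
    """Toy aggregator: one endpoint in front of several MCP-like servers (illustrative only)."""
    servers: Dict[str, Dict[str, Callable[..., Any]]] = field(default_factory=dict)

    def add_server(self, name: str, tools: Dict[str, Callable[..., Any]]) -> None:
        # Adding a server changes what the space exposes, not the client's endpoint URL.
        self.servers[name] = tools

    def remove_server(self, name: str) -> None:
        self.servers.pop(name, None)

    def list_tools(self) -> list[str]:
        # Prefix each tool with its server name so identical tool names can't collide.
        return sorted(f"{srv}{SEP}{tool}" for srv, tools in self.servers.items() for tool in tools)

    def call_tool(self, qualified_name: str, **kwargs: Any) -> Any:
        # Split "server__tool" back into its parts and dispatch to the owning server.
        srv, sep, tool = qualified_name.partition(SEP)
        if not sep or srv not in self.servers or tool not in self.servers[srv]:
            raise KeyError(f"unknown tool: {qualified_name}")
        return self.servers[srv][tool](**kwargs)
```

Both Gmail and Slack can expose a tool called `send`, yet the client sees `gmail__send` and `slack__send` on the same endpoint, and removing a server only shrinks that list.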

As a side note, aggregating many MCP servers under a single visible MCP server can cause client-side issues, such as an excessive number of listed tools or increased strain on the client's context. We are addressing this challenge through dynamic tool selection and description expansion.
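
The post doesn't describe how dynamic tool selection works, so the following is only a stand-in: a naive keyword-overlap ranking that exposes the few tools whose descriptions best match the user's request, instead of every tool in the space. The function names and the scoring rule are assumptions; a production system would more likely use embeddings or model-driven selection.

```python
import re
from typing import Dict, List


def _words(text: str) -> set[str]:
    # Deliberately naive tokenizer: lowercase alphanumeric runs.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def select_tools(query: str, tools: Dict[str, str], k: int = 3) -> List[str]:
    """Hypothetical helper: return up to k tool names whose descriptions share the most words with the query."""
    q = _words(query)
    scored = [(len(q & _words(desc)), name) for name, desc in tools.items()]
    # Highest overlap first; ties broken by name so the result is deterministic.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    # Drop tools with no overlap at all.
    return [name for score, name in scored[:k] if score > 0]
```

Only the selected subset would be listed to the client, which keeps the tool list and its descriptions from crowding the model's context.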

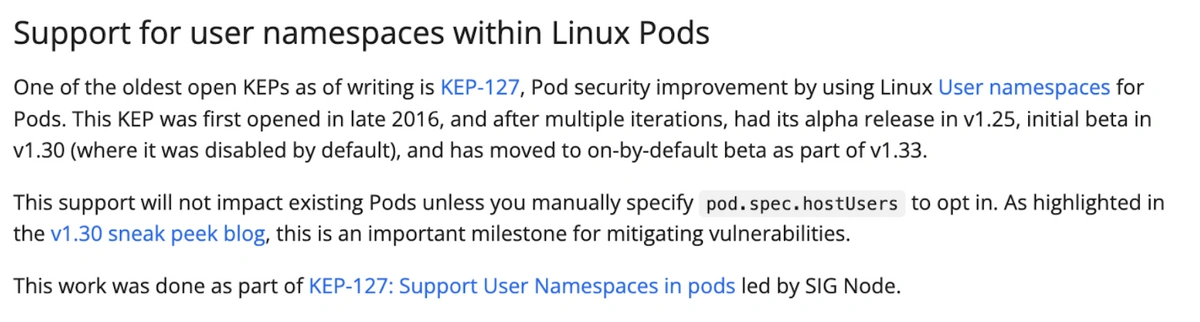

Technically, each space is a virtual server that “hosts” multiple real MCP servers. A routing server relays traffic to the right MCP server (more on that below). Each MCP server (process) runs in a Docker-in-Docker setup and, most importantly, runs rootless and unprivileged, which creates a sandbox boundary between individual servers within the same space. At the tenant level, every customer operates within its own pod on Kubernetes (K8s).

To keep resource usage efficient, we decided not to spin up a full Docker container per MCP server. Instead, we took advantage of the newly supported Docker-in-Docker on AWS. All servers still run within the same space, but on principle we never trust them, so we further isolate them from each other.
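
As an illustration of what “unprivileged” can mean for a single server process, the sketch below builds a `docker run` argument list using standard hardening options: a non-root user, all Linux capabilities dropped, `no-new-privileges`, a read-only root filesystem, and resource limits. The specific flags, limits, UID, container naming, and mount points are assumptions, not MCPTotal's configuration. Note also that rootless mode refers to how the Docker daemon itself runs and is configured separately; these flags only harden the container.

```python
from typing import List, Optional


def hardened_run_args(image: str, server_id: str, uid: int = 1000,
                      data_dir: Optional[str] = None,
                      shared_dir: Optional[str] = None) -> List[str]:
    """Hypothetical helper: build a `docker run` argv for one MCP server process."""
    args = [
        "docker", "run", "--rm", "--detach",
        "--name", f"mcp-{server_id}",                  # assumed naming scheme
        "--user", f"{uid}:{uid}",                      # non-root inside the container
        "--cap-drop", "ALL",                           # drop every Linux capability
        "--security-opt", "no-new-privileges",         # block setuid-style escalation
        "--read-only",                                 # immutable root filesystem
        "--pids-limit", "256",                         # cap the number of processes
        "--memory", "512m",                            # cap memory usage
    ]
    if data_dir:
        # Private writable volume for this server only (e.g. caches, tokens on disk).
        args += ["--volume", f"{data_dir}:/data"]
    if shared_dir:
        # Optional shared volume; see the file-sharing section.
        args += ["--volume", f"{shared_dir}:/shared"]
    # Note: there is deliberately no --privileged flag.
    return args + [image]
```

The resulting list can be handed to `subprocess.run` inside the outer container; each server gets its own private `/data` volume, so one server's cached data is not visible to its neighbors.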

This matters because MCP servers often hold credentials for third-party services and may write data locally for caching purposes. Without isolation, that data could be exposed or misused. Our approach is to build an MCP trust layer around the idea of zero trust for anything we run.

You can read more about its security implications in this blog post.

Filesystem isolation and sharing

Given our ‘never trust’ principle, we mount the filesystem per pod and per process, so thanks to the Docker-in-Docker architecture, MCP servers can't access each other's files. Today, we rely on EBS to store everything encrypted, which is fairly standard.

To enable efficient file sharing between tools within our MCP servers, we implemented a shared directory mechanism. We chose this approach, where a /shared directory is mapped across all servers in a pod, to avoid the more expensive and network-intensive process of sending base64-encoded files back and forth to the LLM.

This mechanism allows servers to read and write files in the shared directory and, critically, to pass file paths to the LLM (through tool call results). For instance, a media file received from Slack can be saved to the shared directory, and the Gmail MCP server can then access it via its internal shared path and use it as an email attachment. This design improves network efficiency and reduces strain on model contexts.
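
A minimal sketch of the pattern, assuming hypothetical `save_to_shared` and `open_from_shared` helpers: one server writes bytes under the shared root and returns only a path for the tool result, and another server reads that path back while refusing anything that resolves outside the shared directory. The containment check is our own addition to illustrate how a path supplied by the LLM could be guarded.

```python
import uuid
from pathlib import Path


def save_to_shared(data: bytes, filename: str, shared_root: str = "/shared") -> str:
    """Write bytes into the shared directory and return the path to hand back to the LLM."""
    root = Path(shared_root)
    # A random prefix avoids collisions between servers writing the same filename;
    # Path(filename).name strips any directory components supplied by the caller.
    target = root / f"{uuid.uuid4().hex}-{Path(filename).name}"
    target.write_bytes(data)
    return str(target)


def open_from_shared(path: str, shared_root: str = "/shared") -> bytes:
    """Read a file referenced by path, refusing anything outside the shared directory."""
    root = Path(shared_root).resolve()
    candidate = Path(path).resolve()
    # resolve() collapses ".." and follows symlinks, so escapes like
    # /shared/../etc/passwd end up outside root and are rejected here.
    if root not in candidate.parents:
        raise PermissionError(f"{path} is outside {root}")
    return candidate.read_bytes()
```

In the Slack-to-Gmail example, the Slack server's tool result would carry only the returned path string, and the Gmail server would call the reader with it to build the attachment, so the file's bytes never pass through the model's context.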

Authenticating and protecting customer keys

Our product supports three user authentication schemes:

- OAuth, which was recently added to the MCP standard
- A simple HTTP header carrying the key (no OAuth; we enroll a randomly generated key)
- A URL containing the secret, for clients that don't support custom HTTP headers
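
For the two non-OAuth schemes, the server has to find the key in either a header or the URL and compare it safely. The sketch below assumes a hypothetical `X-MCP-Key` header and a hypothetical `key` query parameter (the post names neither), and it compares a SHA-256 digest in constant time with `hmac.compare_digest`. Storing only a digest of the enrolled key is our assumption, not a described MCPTotal detail. OAuth token validation is out of scope here.

```python
import hashlib
import hmac
from typing import Mapping, Optional
from urllib.parse import parse_qs, urlsplit

# Hypothetical names; the actual header and query parameter are not specified.
HEADER_NAME = "x-mcp-key"
QUERY_PARAM = "key"


def extract_key(url: str, headers: Mapping[str, str]) -> Optional[str]:
    """Prefer the header scheme; fall back to a secret embedded in the URL."""
    lowered = {k.lower(): v for k, v in headers.items()}  # header names are case-insensitive
    if HEADER_NAME in lowered:
        return lowered[HEADER_NAME]
    values = parse_qs(urlsplit(url).query).get(QUERY_PARAM)
    return values[0] if values else None


def key_matches(presented: Optional[str], stored_sha256_hex: str) -> bool:
    """Compare against a stored SHA-256 digest in constant time, so the raw key isn't stored."""
    if presented is None:
        return False
    digest = hashlib.sha256(presented.encode()).hexdigest()
    return hmac.compare_digest(digest, stored_sha256_hex)
```

Because URL secrets tend to end up in logs and browser history, the header form is preferable whenever the client supports it; the URL form exists only for clients that can't set custom headers.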

Then there is a fourth type of key we must safeguard, because we support customer Single Sign-On (SSO). Customers can use our SSO to connect to their third-party applications, such as their Gmail account. This involves a full OAuth flow with Google to gain access to the account with the user's consent. Without it, gaining automated access to some accounts can be very hard and require a lengthy process.

We must then securely store these keys, ensuring that only the respective MCP server can utilize them. This also highlights the importance of maintaining isolation per MCP server within the same environment.
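
The per-server rule can be illustrated with a toy credential store in which each credential is bound to one (tenant, MCP server) pair, and a server may only read the credential issued to itself. The class and method names are hypothetical. The key assumption, stated in the comments, is that the caller's server identity comes from the runtime (for example, which sandbox made the request) and never from the request body.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple


@dataclass
class ScopedCredentialStore:
    """Toy store: a credential is bound to exactly one (tenant, MCP server) pair."""
    _secrets: Dict[Tuple[str, str], str] = field(default_factory=dict)

    def put(self, tenant: str, server_id: str, credential: str) -> None:
        self._secrets[(tenant, server_id)] = credential

    def get(self, tenant: str, caller_server_id: str, requested_server_id: str) -> str:
        # caller_server_id must be established by the runtime (which sandbox is asking),
        # not taken from anything the server itself sends.
        if caller_server_id != requested_server_id:
            raise PermissionError(
                f"{caller_server_id} may not read {requested_server_id}'s credential")
        try:
            return self._secrets[(tenant, requested_server_id)]
        except KeyError:
            raise LookupError(f"no credential for {requested_server_id}") from None
```

Even though the Gmail and Slack servers share a space, a compromised Slack server asking for the Gmail token is refused.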

Vaulting secrets

One of our strongest differentiators is our deep expertise in data security. Long before MCPTotal, we spent four years building a secure database ‘vault’ designed to enforce strict access controls. Its killer feature is simple yet powerful: every request for data is verified against the identity of the requestor, and only then is access granted. Data access and access control are glued together, so there's no way to bypass them if the vault is configured correctly.

Security by design is the philosophy that drives our architecture today. Unfortunately, while everybody is used to safeguarding production environment keys in a vault, few do the same for customer keys. That's not enough: this gap makes all the difference in a data breach.

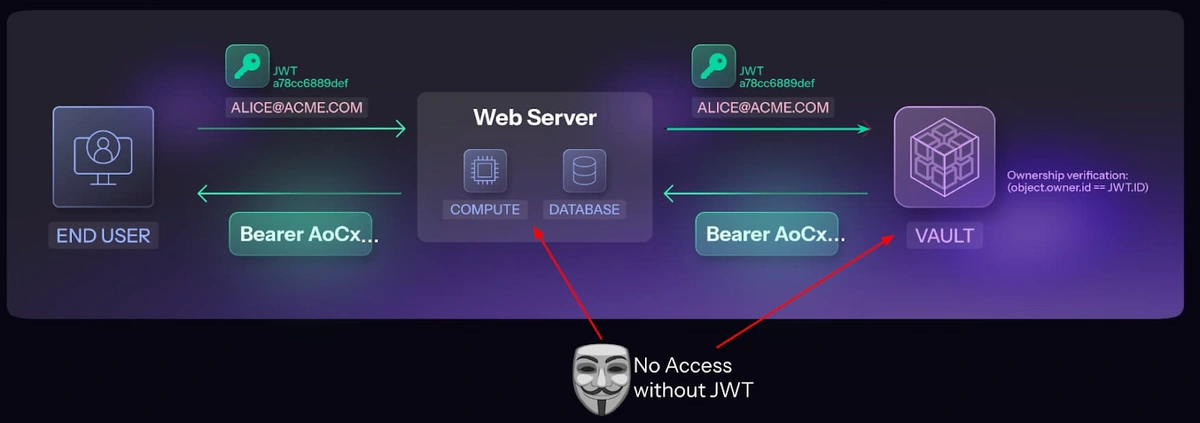

Let's zoom in on the vault and backend architecture with a simplified diagram to see what this means for safeguarding credentials. Technically, we lock data in the vault using the user's login JWT. This way, anybody lurking in the backend, benign or malicious, cannot simply access data in the vault unless they can prove they are acting on behalf of a specific user.

Normally, the backend server enforces the access controls by itself, which means that if it's compromised, it's game over for all the customer secrets in the DB. Not in our case.

In short, if the backend is compromised, the worst case shrinks from stealing all credentials at once to stealing only the credentials of the user currently making requests.
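
The idea of locking vault data with the user's JWT can be sketched as a vault that derives the caller's identity from a verified token rather than from a parameter the backend passes in. This is a simplified illustration, not MCPTotal's vault: to stay within the standard library it verifies HS256 tokens with a shared key and checks only the signature and expiry, whereas a production setup would more likely verify Auth0-issued RS256 tokens against the provider's published public keys, so the vault never holds a key that could mint tokens.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Dict


def _b64url_decode(part: str) -> bytes:
    # JWT segments are base64url without padding; restore it before decoding.
    return base64.urlsafe_b64decode(part + "=" * (-len(part) % 4))


def verify_hs256(token: str, key: bytes) -> dict:
    """Minimal HS256 JWT check: signature and expiry only (no audience/issuer checks)."""
    header_b64, payload_b64, sig_b64 = token.split(".")
    if json.loads(_b64url_decode(header_b64)).get("alg") != "HS256":
        raise PermissionError("unexpected algorithm")
    expected = hmac.new(key, f"{header_b64}.{payload_b64}".encode(), hashlib.sha256).digest()
    if not hmac.compare_digest(expected, _b64url_decode(sig_b64)):
        raise PermissionError("bad signature")
    claims = json.loads(_b64url_decode(payload_b64))
    if claims.get("exp", 0) < time.time():
        raise PermissionError("token expired")
    return claims


class Vault:
    """Toy vault: every read or write must present a user's JWT; identity comes from the token."""

    def __init__(self, verify_key: bytes) -> None:
        self._key = verify_key
        self._data: Dict[str, Dict[str, str]] = {}

    def put(self, jwt: str, name: str, secret: str) -> None:
        user = verify_hs256(jwt, self._key)["sub"]
        self._data.setdefault(user, {})[name] = secret

    def get(self, jwt: str, name: str) -> str:
        user = verify_hs256(jwt, self._key)["sub"]
        # Only the token holder's own secrets are reachable; others raise KeyError.
        return self._data[user][name]
```

A backend holding Alice's token can read Alice's secrets and nothing else. Reaching Bob's secrets requires a valid token for Bob, which is exactly what forces an attacker to either wait for users to log in or compromise the identity provider.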

If the attacker wants to steal all keys, then they will have to do one of two things:

- Sit and wait for every user to log in to the front end and run control operations. Hooking code and intercepting traffic in production at runtime without crashing everything is very hard and time-consuming.
- Hack into our login provider, in this case Auth0, to obtain keys on behalf of all users. That is one big extra step for the bad guys.

Either way, our ultimate goal in defeating attackers is to make them spend ever more time and resources.

MCP data flow

For inbound routing, we utilize Istio to manage all traffic to our MCP servers. Each space is assigned a unique URL ID, which allows us to direct traffic to the appropriate pod. We have configured the pods to restrict inter-pod communication and LAN access, permitting only internet connectivity.
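
In the real deployment these decisions live in Istio routing rules and Kubernetes network policies rather than in application code. The Python sketch below only illustrates the two decisions described above: mapping a space's URL ID to the service in front of that tenant's pod, and allowing egress only to public internet addresses. The URL layout (`/<space_id>/mcp`), the `spaces` namespace, and the `space-<id>` service naming are hypothetical. The `<service>.<namespace>.svc.cluster.local` form is standard Kubernetes service DNS.

```python
import ipaddress
import re
from urllib.parse import urlsplit

# Hypothetical URL layout: https://<host>/<space_id>/mcp with an opaque lowercase ID.
SPACE_PATH = re.compile(r"^/(?P<space_id>[a-z0-9]{8,64})/mcp/?$")


def route_space(url: str, namespace: str = "spaces") -> str:
    """Map a space URL to the in-cluster service for that tenant's pod (naming is assumed)."""
    match = SPACE_PATH.match(urlsplit(url).path)
    if not match:
        raise LookupError("not a space endpoint")
    # Kubernetes service DNS form: <service>.<namespace>.svc.cluster.local
    return f"space-{match['space_id']}.{namespace}.svc.cluster.local"


def egress_allowed(dest_ip: str) -> bool:
    """Allow only globally routable destinations: no pod IPs, LAN, loopback, or link-local metadata."""
    return ipaddress.ip_address(dest_ip).is_global
```

Blocking non-global ranges also covers the link-local cloud metadata address (169.254.169.254), a common target when a compromised workload tries to reach infrastructure credentials.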

Summary

MCP isn't just an innovation; it's an innovation multiplier. It boosts productivity for both users and AI agents beyond what LLMs alone can offer.

We comply with SOC 2, which is straightforward today, but compliance doesn't necessarily equate to stronger security, so we haven't dwelled on it here.

In this post, we showed that we go beyond simply building infrastructure to run MCP servers: we build everything with careful thought, because our focus has always been security by design. That means protecting user data both as it flows through our systems and, in some cases, while it resides in users' own MCP server instances.

We’re also strong believers in community collaboration. If you’re a researcher who discovers a vulnerability in our product, we’d be glad to recognize your efforts with a reward. Just reach out at security@mcptotal.ai.